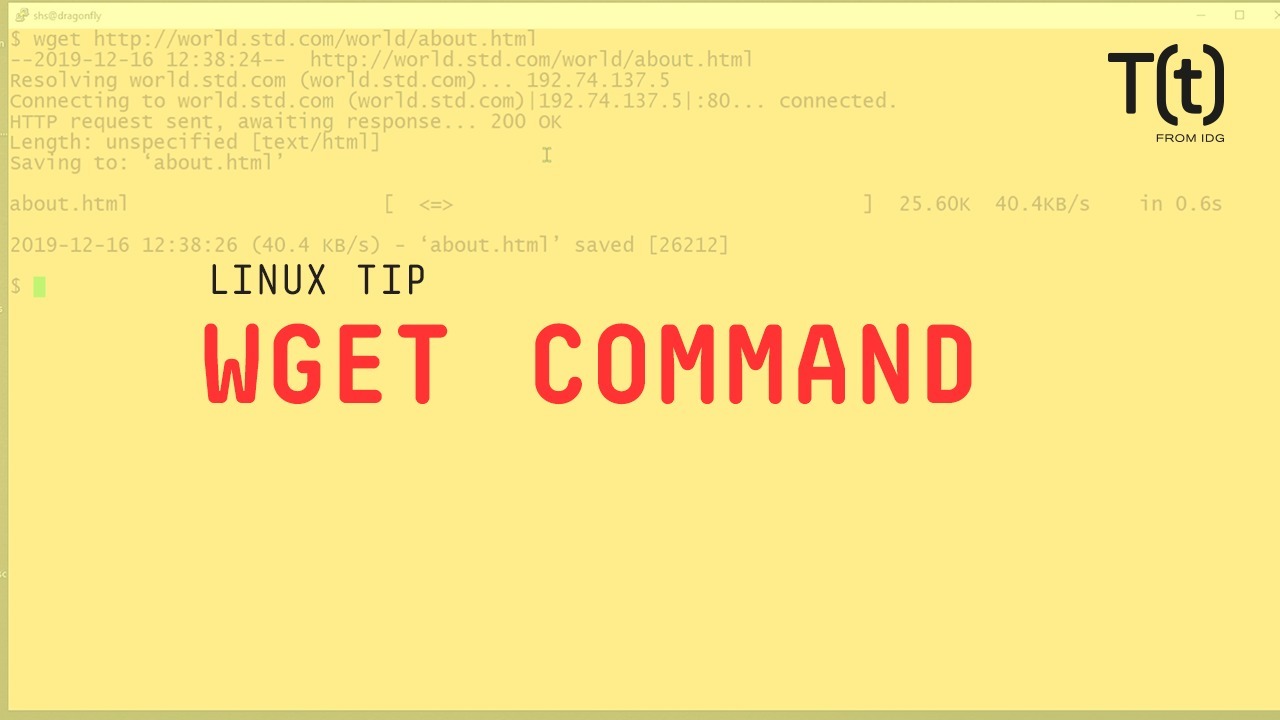

Hi, this is Sandra Henry-Stocker, author of the “Unix as a Second Language” blog on NetworkWorld.

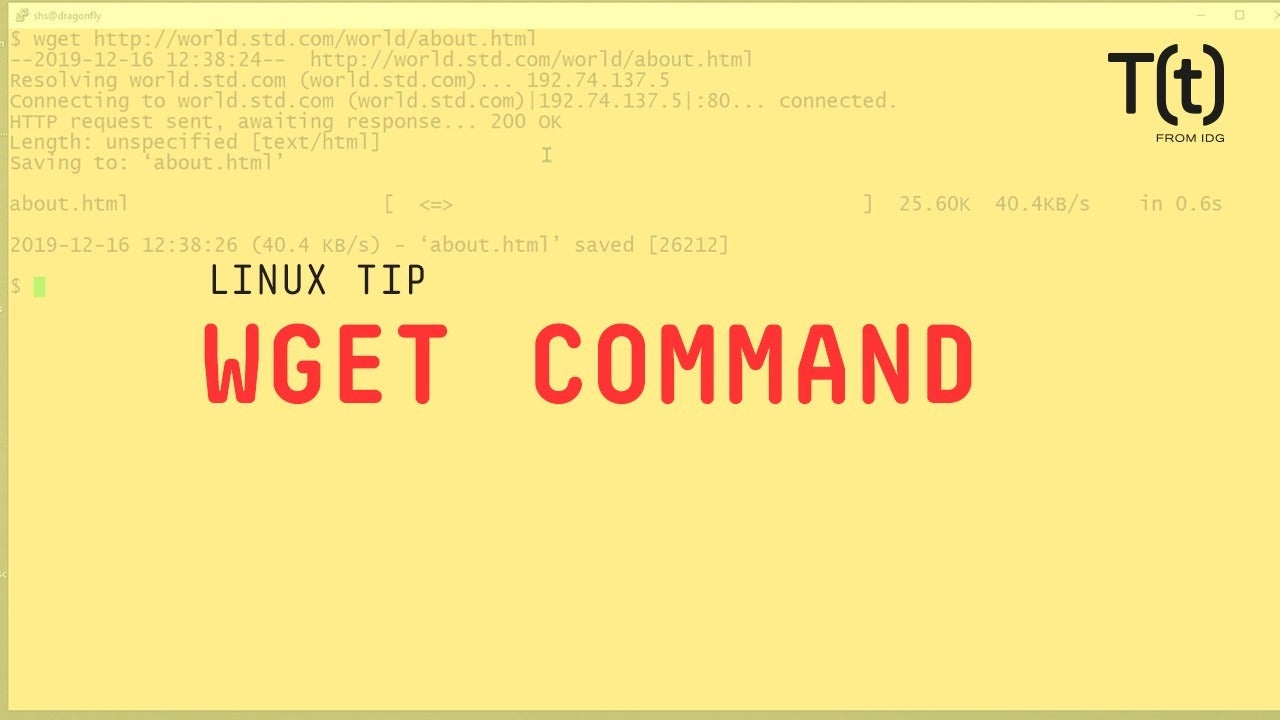

In this Linux tip, we’re going to look at the wget command, which makes downloading pages from websites, even recursively, very easy. In this first example, we’re going to download a single web page:
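The transcript doesn't include the on-screen command. As a minimal sketch, the basic form is just `wget` followed by a URL. Here it is wrapped in a hypothetical `save_page` function (not part of wget). `https://example.com/` is a placeholder, not the site used in the video.

```shell
# Hypothetical helper (not part of wget): download a single page.
# wget names the saved file after the last part of the URL and
# falls back to index.html when the URL ends in a slash.
save_page() {
  local url="$1"
  wget "$url"
}
```

Running `save_page https://example.com/` typically leaves an `index.html` file in the current directory.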

Keep in mind that this command downloads only the HTML that defines the page, not necessarily the images or other files it references. If you want your downloaded files stored in a site-specific directory, use the -P option to give that directory a name.
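A minimal sketch of the -P option, again using a hypothetical wrapper function and a placeholder directory name (`example-site`) and URL:

```shell
# Hypothetical helper: save a page under a directory of your choosing.
# -P (long form --directory-prefix) sets the directory wget saves into;
# wget creates that directory if it does not already exist.
save_page_to() {
  local dir="$1" url="$2"
  wget -P "$dir" "$url"
}
```

For example, `save_page_to example-site https://example.com/` should put the page in `example-site/` instead of the current directory.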

Depending on a site’s settings, the -r option may let you download the site recursively. wget does this by following the links it finds in each page. Be aware that this can pull down a lot of content. Still, commands like this provide an easy way to download a website for archival purposes.
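A sketch of a recursive download, assuming a placeholder URL and a hypothetical `mirror_site` wrapper. The comments describe wget's default behavior as documented in its manual.

```shell
# Hypothetical helper: download a site recursively.
# -r (--recursive) tells wget to follow the links it finds in each page.
# By default wget saves files under a directory named after the host
# (for example, example.com/) and honors the site's robots.txt.
mirror_site() {
  local url="$1"
  wget -r "$url"
}
```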

With -l, you can limit how many levels of links wget will follow when it downloads recursively. Without it, the maximum depth is 5.
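Combining -r with -l might look like this. The function name and the depth value are illustrative only.

```shell
# Hypothetical helper: recursive download with a depth limit.
# -l (--level) caps how many levels of links wget follows under -r.
shallow_mirror() {
  local depth="$1" url="$2"
  wget -r -l "$depth" "$url"
}
```

`shallow_mirror 2 https://example.com/` would fetch the starting page, the pages it links to, and the pages those link to, and go no deeper.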

If a download is going to take a long time, you can use -b to download the files in the background. wget returns you to the prompt right away and writes its progress messages to a file named wget-log.
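The video's exact command and output aren't reproduced here. This is a minimal sketch that pairs -b with a recursive download, using a hypothetical wrapper and a placeholder URL.

```shell
# Hypothetical helper: run a recursive download in the background.
# -b (--background) detaches wget right after it starts; unless -o
# names another log file, output goes to ./wget-log.
mirror_in_background() {
  local url="$1"
  wget -b -r "$url"
}
```

You can follow the download's progress with `tail -f wget-log`.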

The wget command can also be used to download software packages. To get the latest version of WordPress, for example, you could use a command like this:
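The URL below is WordPress's usual "latest release" download link. It is an assumption, because the transcript doesn't show the command used in the video. The wrapper function is hypothetical.

```shell
# Hypothetical helper: fetch the current WordPress release tarball.
# Assumed default URL; pass a different URL as the first argument
# to override it.
get_latest_wordpress() {
  local url="${1:-https://wordpress.org/latest.tar.gz}"
  wget "$url"
}
```

After the download finishes, `tar -xzf latest.tar.gz` unpacks the archive.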

That’s your Linux tip for the wget command. If you have questions or would like to suggest a topic, please add a comment below. And don’t forget to subscribe to the IDG Tech(talk) channel on YouTube.

If you liked this video, please hit the like and share buttons. For more Linux tips, be sure to follow us on Facebook, YouTube and NetworkWorld.com.