Run your own AI with VMware: https://ntck.co/vmware

Unlock the power of Private AI on your own device with NetworkChuck! Discover how to easily set up your own AI model, similar to ChatGPT, but entirely offline and private, right on your computer. Learn how this technology can revolutionize your job, enhance privacy, and even survive a zombie apocalypse. Plus, dive into the world of fine-tuning AI with VMware and Nvidia, making it possible to tailor AI to your specific needs. Whether you’re a tech enthusiast or a professional looking to leverage AI in your work, this video is packed with insights and practical steps to harness the future of technology.

🧪🧪Take the quiz and win some ☕☕!: https://ntck.co/437quiz

🔥🔥Join the NetworkChuck Academy!: https://ntck.co/NCAcademy

VIDEO STUFF

—————————————————

Ollama: https://ollama.com/

PrivateGPT: https://docs.privategpt.dev/overview/welcome/introduction

PrivateGPT on WSL2 with GPU: https://medium.com/@docteur_rs/installing-privategpt-on-wsl-with-gpu-support-5798d763aa31

**Sponsored by VMware by Broadcom**

SUPPORT NETWORKCHUCK

—————————————————

➡️NetworkChuck membership: https://ntck.co/Premium

☕☕ COFFEE and MERCH: https://ntck.co/coffee

Check out my new channel: https://ntck.co/ncclips

🆘🆘NEED HELP?? Join the Discord Server: https://discord.gg/networkchuck

STUDY WITH ME on Twitch: https://bit.ly/nc_twitch

READY TO LEARN??

—————————————————

-Learn Python: https://bit.ly/3rzZjzz

-Get your CCNA: https://bit.ly/nc-ccna

FOLLOW ME EVERYWHERE

—————————————————

Instagram: https://www.instagram.com/networkchuck/

Twitter: https://twitter.com/networkchuck

Facebook: https://www.facebook.com/NetworkChuck/

Join the Discord server: http://bit.ly/nc-discord

AFFILIATES & REFERRALS

—————————————————

(GEAR I USE…STUFF I RECOMMEND)

My network gear: https://geni.us/L6wyIUj

Amazon Affiliate Store: https://www.amazon.com/shop/networkchuck

Buy a Raspberry Pi: https://geni.us/aBeqAL

Do you want to know how I draw on the screen?? Go to https://ntck.co/EpicPen and use code NetworkChuck to get 20% off!!

Fast and reliable UniFi in the cloud: https://hostifi.com/?via=chuck

– Setting up Private AI on your computer

– Offline AI models like ChatGPT

– Enhancing job performance with Private AI

– VMware and Nvidia AI solutions

– Fine-tuning AI models for specific needs

– Running AI without internet

– Privacy concerns with AI technologies

– Surviving a zombie apocalypse with AI

– VMware Private AI Foundation

– Nvidia AI enterprise tools

– Connecting knowledge bases to Private GPT

– Retrieval Augmented Generation (RAG) with AI

– Installing WSL for AI projects

– Running LLMs on personal devices

– VMware deep learning VMs

– Customizing AI with VMware and Nvidia

– Private GPT project setup

– Leveraging GPUs for AI processing

– Consulting databases with AI for accurate answers

– VMware’s role in private AI development

– Intel and IBM partnerships with VMware for AI

– Running local private AI in companies

– NetworkChuck’s guide to private AI

– Future of technology with private and fine-tuned AI

**00:00** – Introduction to Private AI and Setup Guide

**00:56** – VMware’s Role in Private AI

**01:50** – Understanding AI Models and Exploring Hugging Face

**02:54** – Training and Power of AI Models

**04:24** – Installing Ollama for Local AI Models

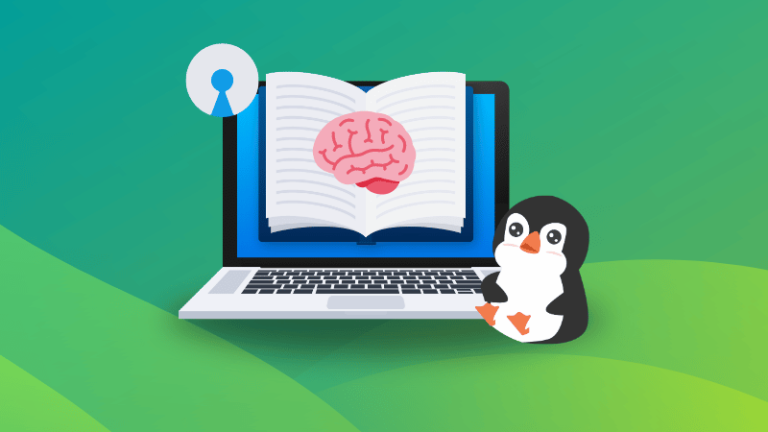

**05:24** – Setting Up Windows Subsystem for Linux (WSL) for AI

**06:53** – Running Your First Local AI Model

**07:23** – Enhancing AI with GPUs for Faster Responses

**08:02** – Fun with AI: Zombie Apocalypse Survival Tips

**08:28** – Switching AI Models for Different Responses

**09:04** – Fine-Tuning AI with Your Own Data

**10:50** – VMware’s Approach to Fine-Tuning AI Models

**12:53** – The Data Scientist’s Workflow with VMware and NVIDIA

**15:23** – VMware’s Partnerships for Diverse AI Solutions

**16:26** – Setting Up Your Own Private GPT with RAG

**18:08** – Bonus: Running Private GPT with Your Knowledge Base

**20:55** – The Future of Private AI and VMware’s Solution

**21:28** – Quiz Announcement for Viewers

#vmware #privategpt #AI

Running your own private AI model allows you to maintain full control over your data, ensure confidentiality, reduce dependency on third-party APIs, and tailor the model’s performance for your specific needs. This is increasingly relevant for developers, agencies, and organisations prioritising privacy, security, and cost-efficiency.

Below is a comprehensive guide on how to run your own private AI, covering essential components, tools, and hosting options:

Why Run Your Own Private AI Model?

1. Data Privacy & Security:

Your data never leaves your server. This is critical for industries dealing with sensitive information like legal, healthcare, finance, or defence.

2. Customisation & Fine-Tuning:

You can fine-tune the model on your own domain-specific data, improving accuracy and relevance for your use cases.

3. Cost Control:

Avoid monthly API fees from cloud providers. Self-hosting shifts spending to upfront hardware and running costs (such as power), which can work out cheaper than per-token API pricing when usage is steady and high.

4. Offline Capability:

Ideal for air-gapped networks, disaster recovery setups, or environments without internet access.

Types of AI Models You Can Run Privately

1. Language Models (LLMs)

- Chat assistants, content generators, semantic search
- Examples: LLaMA 3, Mistral, Falcon, GPT-J, GPT-NeoX

2. Image Models

- Image generation (e.g., Stable Diffusion)
- Image classification and object detection (ResNet, YOLO)

3. Voice/Audio Models

- Whisper (speech-to-text)
- Bark / Tortoise (text-to-speech)

4. Multi-modal Models

- Combine inputs such as text and images (some models also handle audio)
- Examples: OpenFlamingo, LLaVA

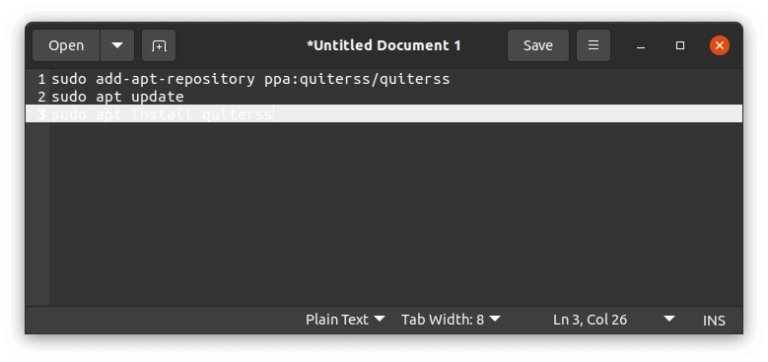

Popular Open-Source AI Models

| Model Name | Use Case | Hardware Requirements | License |
|---|---|---|---|
| LLaMA 2/3 | Chat, writing, search | Mid-high (8GB+ VRAM) | Meta’s community license |
| Mistral 7B | Fast, multilingual LLM | Moderate | Apache 2.0 |
| GPT-J / GPT-NeoX | Conversational, general NLP | High | Apache 2.0 |
| Stable Diffusion | Image generation | 6GB+ VRAM GPU | CreativeML OpenRAIL-M (varies by version) |
| Whisper | Speech-to-text | Low-moderate | MIT |

How to Run Your Own AI Model Privately

1. Choose Your Model & Use Case

- Do you need a chatbot? → Use LLaMA, Mistral, or GPT-NeoX
- Do you need image generation? → Use Stable Diffusion
- Do you want private transcription? → Use Whisper

2. Set Up Your Hardware

Local Machine Requirements:

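- Minimum 8GB RAM (enough for 7B models; 13B models need about 16GB)
- GPU with 6GB–24GB VRAM (NVIDIA preferred); optional for runtimes such as Ollama that can run on the CPU, but responses are much faster on a GPU
- SSD storage (20–100GB depending on the model)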

Alternative:

- Run in a VM on your own hypervisor or private cloud (e.g., Proxmox, VMware ESXi, VirtualBox)
- Use a Raspberry Pi or Jetson Nano for edge AI (lower performance)
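A rough way to check which models fit the VRAM range above: weight memory is the parameter count times the bytes stored per parameter, so quantisation (16-bit → 8-bit → 4-bit) shrinks it proportionally. The sketch below assumes a flat 20% overhead for the KV cache and activations; the real figure depends on context length and the runtime, so treat the results as ballpark numbers only.

```python
def estimate_weight_memory_gb(params_billion: float, bits_per_weight: int) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes).

    params_billion * 1e9 weights * (bits / 8) bytes each / 1e9 bytes per GB
    simplifies to params_billion * bits / 8.
    """
    return params_billion * bits_per_weight / 8


def estimate_vram_gb(params_billion: float, bits_per_weight: int, overhead: float = 1.2) -> float:
    """Weights plus an ASSUMED ~20% extra for KV cache and activations."""
    return estimate_weight_memory_gb(params_billion, bits_per_weight) * overhead


for bits in (16, 8, 4):
    print(f"7B at {bits}-bit: ~{estimate_vram_gb(7, bits):.1f} GB")
# 7B at 16-bit: ~16.8 GB
# 7B at 8-bit: ~8.4 GB
# 7B at 4-bit: ~4.2 GB
```

This is why a 4-bit 7B model runs comfortably on a 6–8GB card, while the same model at 16-bit needs a 24GB-class GPU.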

3. Choose an AI Runtime Environment

Install one of the following:

- Ollama – Easiest way to run LLaMA, Mistral, etc. locally
- Text Generation WebUI – Web-based interface for chatting with various models
- LM Studio – Desktop app for running local models
- AutoGPTQ or ExLlama – Optimised inference for quantised models (faster, less memory)
- Docker – For containerised deployment on your own servers (GPU clouds such as RunPod or Paperspace can run the same containers, but your data then leaves your premises)

4. Download & Deploy the Model

For example, with Ollama:
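A minimal sketch, assuming Ollama is installed and a model has already been downloaded (for instance with `ollama pull llama3`; `ollama run llama3` opens an interactive chat in the terminal instead). The local Ollama server listens on `localhost:11434` and exposes a REST endpoint, `/api/generate`, which the Python function below calls using only the standard library. The model name and helper names are illustrative choices.

```python
import json
import urllib.request

# Ollama's local server listens on port 11434 by default.
OLLAMA_URL = "http://localhost:11434/api/generate"


def build_generate_payload(model: str, prompt: str) -> bytes:
    """JSON body for a single completion; stream=False returns one JSON object."""
    body = {"model": model, "prompt": prompt, "stream": False}
    return json.dumps(body).encode("utf-8")


def ask_ollama(prompt: str, model: str = "llama3", url: str = OLLAMA_URL) -> str:
    """Send one prompt to a locally running Ollama server and return its reply text."""
    request = urllib.request.Request(
        url,
        data=build_generate_payload(model, prompt),
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    # Generous timeout: the first request may wait while the model loads into memory.
    with urllib.request.urlopen(request, timeout=300) as response:
        return json.loads(response.read())["response"]
```

Usage: `ask_ollama("How do I purify water without electricity?")`. Because the request goes to `localhost`, the prompt never leaves the machine.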

Or use Hugging Face:
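A hedged sketch using the Hugging Face `transformers` library, assumed to be installed together with `accelerate` (which `device_map="auto"` relies on to place the model on the GPU when one is available). The model ID is only an example; some repositories on the Hub, including Mistral's and Meta's, are gated and require accepting the licence and logging in before the first download.

```python
from functools import lru_cache

# Example model ID (an assumption, swap in any text-generation model you have access to).
DEFAULT_MODEL_ID = "mistralai/Mistral-7B-Instruct-v0.2"


@lru_cache(maxsize=2)
def load_generator(model_id: str = DEFAULT_MODEL_ID):
    """Load a text-generation pipeline once and reuse it on later calls."""
    # Imported lazily so this file can be loaded even where transformers isn't installed.
    from transformers import pipeline

    # The first call downloads the weights into the local Hugging Face cache;
    # later runs load them from disk.
    return pipeline("text-generation", model=model_id, device_map="auto")


def generate_locally(prompt: str, model_id: str = DEFAULT_MODEL_ID, max_new_tokens: int = 200) -> str:
    """Generate a completion on this machine; return_full_text=False drops the echoed prompt."""
    generator = load_generator(model_id)
    outputs = generator(prompt, max_new_tokens=max_new_tokens, return_full_text=False)
    return outputs[0]["generated_text"]
```

After the first download, setting the environment variable `HF_HUB_OFFLINE=1` before starting Python keeps the Hub client from contacting the internet.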

You can also deploy through Docker:
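For instance, Ollama publishes an official `ollama/ollama` image. The Python helper below assembles the `docker run` command from Ollama's documented instructions so it can be scripted, with one deliberate change: by default the port is published on `127.0.0.1` only, so the API is not reachable from other machines. `--gpus=all` assumes an NVIDIA GPU with the NVIDIA Container Toolkit installed. The function names are hypothetical.

```python
import shlex
import subprocess


def ollama_docker_command(gpu: bool = False, bind_address: str = "127.0.0.1", port: int = 11434) -> list[str]:
    """Build the `docker run` arguments for the official ollama/ollama image."""
    cmd = ["docker", "run", "-d"]
    if gpu:
        # Requires an NVIDIA GPU and the NVIDIA Container Toolkit on the host.
        cmd.append("--gpus=all")
    cmd += [
        # Named volume so downloaded models survive container restarts.
        "-v", "ollama:/root/.ollama",
        # Publish the API on the chosen address only (localhost by default).
        "-p", f"{bind_address}:{port}:11434",
        "--name", "ollama",
        "ollama/ollama",
    ]
    return cmd


def start_ollama_container(gpu: bool = False) -> None:
    """Run the command; raises CalledProcessError if docker exits with an error."""
    subprocess.run(ollama_docker_command(gpu=gpu), check=True)


print(shlex.join(ollama_docker_command()))
# docker run -d -v ollama:/root/.ollama -p 127.0.0.1:11434:11434 --name ollama ollama/ollama
```

Once the container is up, `docker exec -it ollama ollama run llama3` starts a chat inside it.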

5. Add a User Interface (Optional)

- Gradio or Streamlit for simple web apps
- LangChain or LlamaIndex for orchestration, such as chaining AI tasks or connecting a knowledge base (RAG)
- Chatbot UI – Modern frontend for custom chatbots
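Most of these front ends work the same way underneath: the model itself is stateless, so the interface keeps the conversation history and resends all of it on every turn. A minimal standard-library sketch of that loop against Ollama's `/api/chat` endpoint, assuming a local Ollama server with an already-downloaded `llama3` model; the helper names are hypothetical.

```python
import json
import urllib.request

OLLAMA_CHAT_URL = "http://localhost:11434/api/chat"


def add_message(history: list[dict], role: str, content: str) -> list[dict]:
    """Return a new history with one message appended (the input list is not modified)."""
    return history + [{"role": role, "content": content}]


def chat_turn(history: list[dict], user_text: str, model: str = "llama3") -> list[dict]:
    """Send the whole conversation plus the new user message; return the updated history."""
    history = add_message(history, "user", user_text)
    body = json.dumps({"model": model, "messages": history, "stream": False}).encode("utf-8")
    request = urllib.request.Request(
        OLLAMA_CHAT_URL,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )
    with urllib.request.urlopen(request, timeout=300) as response:
        reply = json.loads(response.read())["message"]["content"]
    return add_message(history, "assistant", reply)
```

Usage: `history = chat_turn([], "List three ways to store water.")`, then `history = chat_turn(history, "Which is cheapest?")`. The second question only makes sense to the model because the first exchange is sent again.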

Fine-Tuning Your Model (Optional)

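Use LoRA (Low-Rank Adaptation) or QLoRA for fine-tuning models on your own text data without needing massive compute. LoRA freezes the base model and trains small adapter matrices; QLoRA does the same on top of a 4-bit quantised base model, cutting memory use further.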

Toolkits include:

- PEFT by Hugging Face
- Axolotl
- Colossal-AI
- AutoTrain (Hugging Face)
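Why LoRA needs so little compute comes down to arithmetic: instead of updating a full weight matrix W (d_out × d_in), it trains two small matrices B (d_out × r) and A (r × d_in) and uses W + (alpha / r) · B A. The pure-Python sketch below counts trainable parameters for one 4096 × 4096 projection (the hidden size of 7B-class models such as LLaMA 2 7B) and merges a toy rank-1 adapter into a weight matrix. It illustrates the idea only; toolkits such as PEFT expose the same `r` and `lora_alpha` settings through `LoraConfig`.

```python
def full_finetune_params(d_out: int, d_in: int) -> int:
    """Trainable parameters when the whole d_out x d_in matrix is updated."""
    return d_out * d_in


def lora_params(d_out: int, d_in: int, rank: int) -> int:
    """Trainable parameters for a LoRA adapter: B is d_out x r, A is r x d_in."""
    return rank * (d_out + d_in)


def matmul(a: list[list[float]], b: list[list[float]]) -> list[list[float]]:
    """Plain-Python matrix product, fine for toy sizes."""
    return [[sum(x * y for x, y in zip(row, col)) for col in zip(*b)] for row in a]


def lora_merged_weight(w, a, b, alpha: float, rank: int):
    """Return W + (alpha / r) * (B @ A), the weight used after merging the adapter."""
    scale = alpha / rank
    delta = matmul(b, a)
    return [
        [w_ij + scale * d_ij for w_ij, d_ij in zip(w_row, d_row)]
        for w_row, d_row in zip(w, delta)
    ]


full = full_finetune_params(4096, 4096)
lora = lora_params(4096, 4096, rank=8)
print(f"full: {full:,}  LoRA r=8: {lora:,}  ({lora / full:.2%})")
# full: 16,777,216  LoRA r=8: 65,536  (0.39%)

# Toy 2x2 example with rank 1: B (2x1) times A (1x2) gives a rank-1 update.
W = [[1.0, 0.0], [0.0, 1.0]]
B = [[1.0], [0.0]]
A = [[0.0, 2.0]]
print(lora_merged_weight(W, A, B, alpha=1.0, rank=1))
# [[1.0, 2.0], [0.0, 1.0]]
```

In the original LoRA formulation B starts at zero, so training begins from exactly the base model's behaviour.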

Hosting Options for Private AI

| Hosting | Description | Best For |
|---|---|---|
| On-Premise | Full control, no data leaves site | High-security organisations |
| Local Desktop/Workstation | Good for testing, development | Freelancers, developers |
| Private Cloud (Proxmox, VMware) | Host LLMs in isolated environments | Agencies, SMEs |
| Self-hosted VPS (no external API) | Dedicated VPS with SSH access | Budget-friendly, private inference |

Security & Privacy Considerations

- Use firewalls or reverse proxies to restrict access
- Enable TLS (HTTPS) if exposing the service to your LAN or WAN
- Isolate models handling PII
- Log and monitor usage for audit trails
- Avoid uploading sensitive data to public training sets
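For the logging point above, a minimal sketch of an audit trail: each request is written as one JSON line with a UTC timestamp, the user and model, and a SHA-256 fingerprint of the prompt instead of its text, so the log does not become another store of sensitive data. The field names and file layout are assumptions, not a standard format. Hashing short or predictable prompts is not anonymisation; the fingerprint only lets you match records.

```python
import hashlib
import json
from datetime import datetime, timezone


def audit_record(user: str, model: str, prompt: str) -> dict:
    """Build one audit entry without storing the prompt text itself."""
    return {
        "timestamp": datetime.now(timezone.utc).isoformat(),
        "user": user,
        "model": model,
        # Fingerprint of the prompt: identical prompts give identical hashes.
        "prompt_sha256": hashlib.sha256(prompt.encode("utf-8")).hexdigest(),
        "prompt_chars": len(prompt),
    }


def append_audit_log(path: str, record: dict) -> None:
    """Append the record as a single JSON line (JSON Lines layout)."""
    with open(path, "a", encoding="utf-8") as log_file:
        log_file.write(json.dumps(record) + "\n")
```

Usage: call `append_audit_log("audit.jsonl", audit_record("alice", "llama3", prompt))` next to each model request; the file can then be shipped to whatever monitoring you already run.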

Conclusion

Running your own private AI puts the power of large language models, image generators, and audio tools directly in your hands, without compromising your data or freedom. Whether you’re building a secure in-house assistant, enhancing productivity tools, or deploying privacy-first applications, private AI models offer flexibility, performance, and autonomy.
