Hello, containers? This is SOE, your standard operating environment. Remember me? Obviously not, as you’ve led everyone to believe that they can use whatever technology they want whenever they want, without having a negative impact on the ability to build, maintain, and sustain highly automated application fleets. You know better than that, containers—it’s simply not true. You need me—enterprises need me. Let’s get back together.

In the not-so-distant past, everyone had a standard operating environment. An SOE typically includes the base operating system (kernel and user space programs), custom configuration files, standard applications used within an organization, software updates, and service packs. It is designed to improve the security posture of the environment, simplify processes, and automate code. Admins implement an SOE as a disk image, kickstart, or virtual machine image for mass deployment within an organization.

SOEs can apply to servers, desktops, laptops, thin clients, mobile devices, and container images. Yes, even container images. In fact, an SOE can reduce the time it takes to deploy, configure, maintain, support, and manage containerized applications.

So, why have containers basically abandoned SOEs?

Lamenting a loss of standards

One school of thought says that standardization gets in the way of innovation and generally slows the development and deployment process. Here’s the thing, though: It’s quite natural for development teams to have standards for code quality, syntax, and even how to set up new development environments on laptops. There’s a saying: Slow is steady, steady is smooth, smooth is fast. You can help developers move fast and well by standardizing on a container base image.

And, while everyone no doubt agrees that standardization increases security, anti-SOE’ers argue that containers are small and thus have a small attack surface. Sure, one container has a small attack surface, but how many organizations use just one container? When the number of containers in your fleet grows to hundreds or thousands, your attack surface grows—in size and complexity—as well.

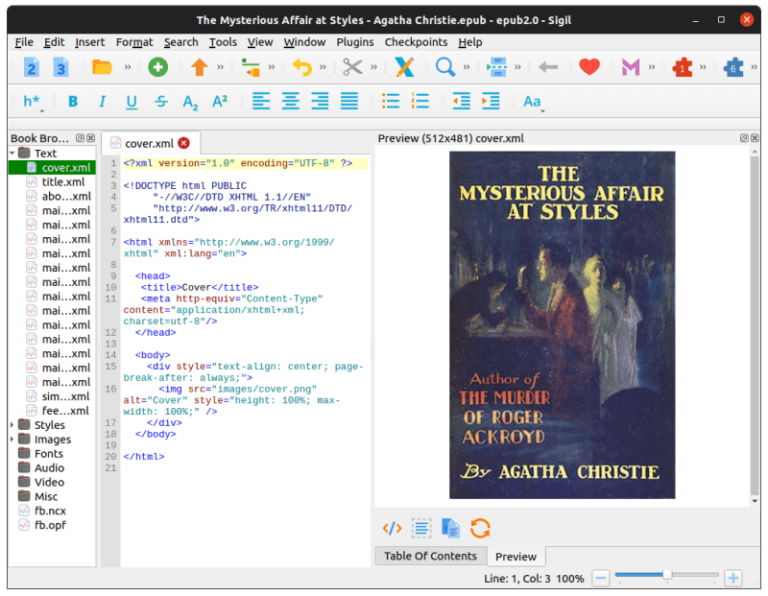

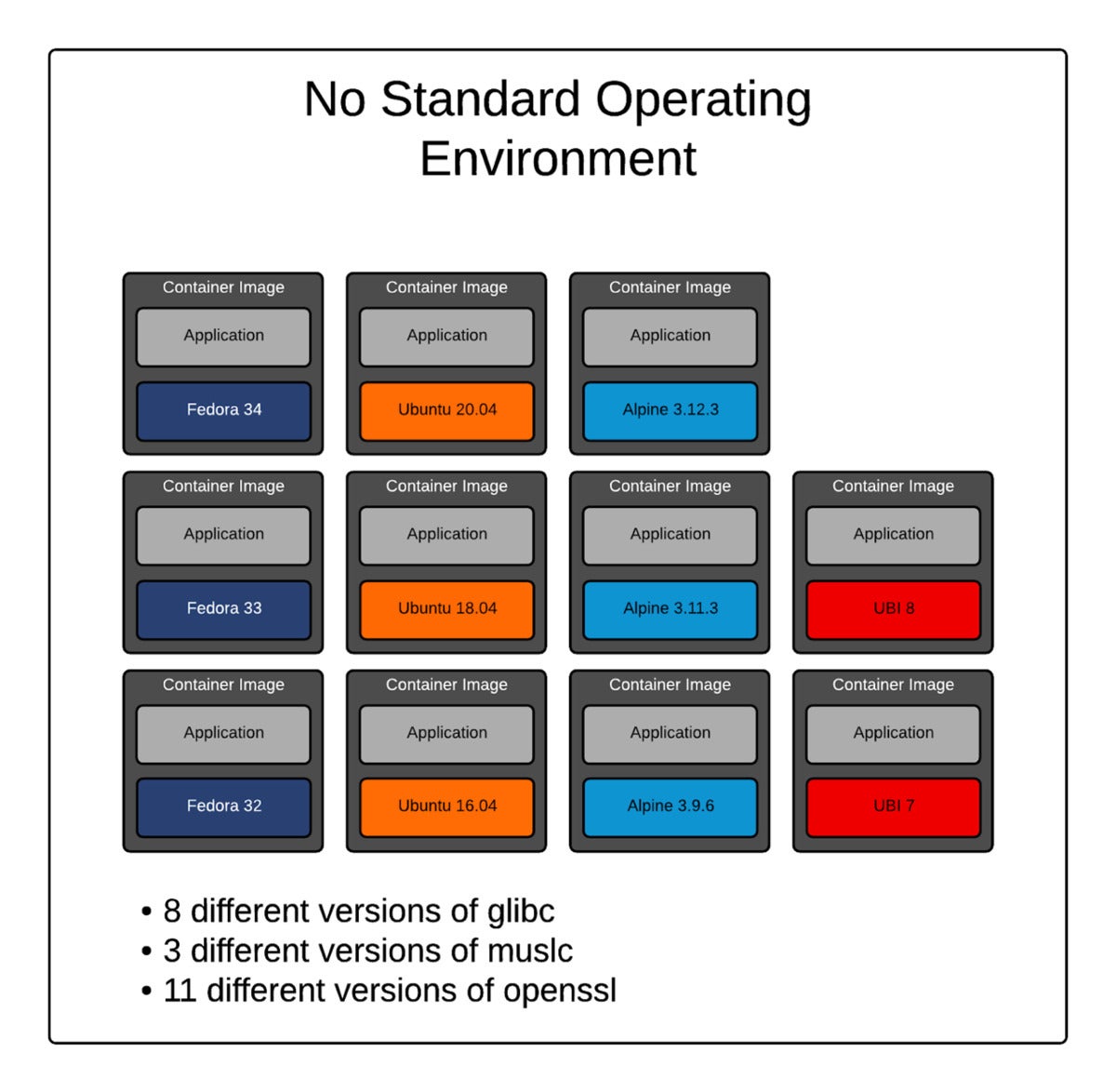

The graphic below demonstrates just how quickly the permutations and attack surface explode in a non-standardized environment. C libraries and OpenSSL alone have a combined total of 22 different package versions to track and patch. This model just doesn’t scale.

Red Hat
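To make the scaling argument concrete, here is a minimal sketch that counts how many distinct package versions a fleet forces you to track. The inventory data, image names, and version strings are all hypothetical and chosen only for illustration; they are not taken from the graphic.

```python
from collections import defaultdict


def distinct_versions(inventory):
    """Map each package name to the set of versions seen across all images."""
    versions = defaultdict(set)
    for packages in inventory.values():
        for name, version in packages.items():
            versions[name].add(version)
    return dict(versions)


def patch_targets(inventory):
    """Count the (package, version) pairs that someone has to track and patch."""
    return sum(len(found) for found in distinct_versions(inventory).values())


# Hypothetical fleet where every team picked its own base image.
MIXED_FLEET = {
    "web": {"glibc": "2.28", "openssl": "1.1.1k"},
    "cache": {"musl": "1.2.3", "openssl": "3.0.8"},
    "db": {"glibc": "2.31", "openssl": "1.1.1n"},
}

# The same three services built on one hypothetical standard base image.
STANDARD_BASE = {"glibc": "2.28", "openssl": "1.1.1k"}
STANDARD_FLEET = {name: dict(STANDARD_BASE) for name in ("web", "cache", "db")}
```

With this sample data, `patch_targets(MIXED_FLEET)` returns 6 while `patch_targets(STANDARD_FLEET)` returns 2. In the standardized fleet the count stays at 2 no matter how many services are added.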

Indeed, there are two good (actually, really good) and big (really big) reasons for reuniting containers and SOEs: people and processes.

Give the people what they need

For each piece of software in an organization, there has to be a subject matter expert (SME) who is responsible for it. There are specialists in the operating system itself, in the different databases, in Java, Python, DNS, web servers, and so on.

The SME model doesn’t change when software is deployed in containers. SMEs still design the best architecture, specify default configurations, determine how backups work, architect where data lives, etc. And the model doesn’t apply just to services like databases, DNS, and caching layers; it also includes the application stack for software written from scratch. Stated another way, even when building new applications, there must be SMEs for things like Ruby, Node.js, Rust, Python, C/C++, Golang, Java, and .NET—not to mention all of the frameworks commonly used with these languages.

Standardizing on a single, high-quality, and secure Linux base image simplifies the life of these SMEs. They can focus on their areas of expertise instead of evaluating Linux libraries and doing bake-offs. It also reduces frustrating interactions among developers, application administrators, security specialists, and even operations teams that run the fleet of underlying servers (especially if the underlying servers are built with the same Linux distribution).

Having a standard container image also makes it easier to hire new people. New developers and SREs get up to speed faster, senior developers carry a lower cognitive load, and operations teams have an easier time on bank holidays.

Why’d you have to go and make processes so complicated?

Complex processes are no good for an organization trying to move faster and be more agile. We learned this lesson in the world of devops and configuration management, but we seem to have forgotten it with containers.

When we standardize on a single container base image, we can simplify processes. Imagine you are a tired SRE who is troubleshooting a containerized service at, oh, 2 a.m. (Because isn’t that always the time you are running through troubleshooting processes?) With a standardized container base image you know what to look for and where, as opposed to searching for a configuration file in multiple places (/etc/httpd/conf/httpd.conf versus /etc/apache2/apache2.conf, anyone?).

If there’s a DNS problem with a Redis container, you know the container will have the exact same configuration as the MySQL or Varnish containers you are familiar with. What this means is that you can fix the DNS once in the base image, and all of the other containers will then inherit the fix. If there’s a problem with the time zone (because if it’s not DNS, it’s time), the time zone data can be updated once in the base image and it will be fixed in every service. Time and DNS are two of the most common things to break, and both come from the container base image, not the application software sitting on top. People forget how much of an application’s behavior is determined by configuration that comes from the container base image.
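One way to picture "fix it once in the base image" is as a walk over image ancestry. The sketch below assumes derived images pick up a base image fix when they are rebuilt on top of it, and it lists every image that descends from the patched base. The image names and the `PARENTS` mapping are hypothetical.

```python
from collections import defaultdict, deque


def images_to_rebuild(parents, patched):
    """Return every image that descends from `patched`, nearest layers first."""
    # Invert the child -> parent mapping so we can walk downward from the base.
    children = defaultdict(list)
    for image, parent in parents.items():
        children[parent].append(image)

    order, queue = [], deque([patched])
    while queue:
        current = queue.popleft()
        # Sort so the result is stable regardless of dictionary order.
        for child in sorted(children[current]):
            order.append(child)
            queue.append(child)
    return order


# Hypothetical ancestry: each image maps to the image it is built FROM.
PARENTS = {
    "redis": "standard-base",
    "mysql": "standard-base",
    "varnish": "standard-base",
    "python-runtime": "standard-base",
    "billing-api": "python-runtime",
    "legacy-app": "other-base",
}
```

Here `images_to_rebuild(PARENTS, "standard-base")` covers five of the six images from a single fix. The hypothetical `legacy-app`, built on a different base, is the one that needs its own separate patch.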

What about when the CI/CD system breaks? Especially when nobody has changed any code that would affect it? That’s fun, right? No, it’s more like shaving yaks. Standardizing on a single base image with a long life cycle and a stable ABI/API prevents unintended breaks in the CI/CD system. This is a critical factor missed by many really smart people. The CI/CD system is essentially a set of organizational processes structured in constantly running code. When this breaks, value creation screeches to a halt. Fixing it costs money, but doesn’t create any new value for the organization. Fixing it just gets you back to par. Simplify the CI/CD system by using a single Linux container base image everywhere, and you will see fewer problems across a fleet of applications. Standardize and you can fix many problems in a single place.

Does using an SOE mean taking away all control from developers? No. Give them control over the higher-level, higher-value components in the stack. Give them control over what web frameworks they choose. Give them control over what encryption to use when saving a credit card number. Give them control over which language to use for the application. But don’t allow them to use 22 different Linux base images just because it’s possible.
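A policy like this can be enforced with a small check in the build pipeline. The following is a minimal sketch, not a full Dockerfile parser: it reads `FROM` instructions, ignores references to earlier build stages, and reports any base image outside an approved set. It does not handle line continuations or `ARG` substitution in `FROM`, and the registry name and sample Dockerfile are hypothetical.

```python
def base_images(dockerfile_text):
    """Return the image named by each FROM instruction, skipping stage references."""
    images, stages = [], set()
    for raw in dockerfile_text.splitlines():
        tokens = raw.strip().split()
        if not tokens or tokens[0].upper() != "FROM":
            continue
        # Drop optional flags such as --platform=linux/amd64.
        args = [token for token in tokens[1:] if not token.startswith("--")]
        if not args:
            continue
        image = args[0]
        # "FROM build" refers to an earlier stage, not to a new base image.
        if image not in stages:
            images.append(image)
        # Remember "AS name" so later FROM lines that reuse the stage are skipped.
        if len(args) >= 3 and args[1].upper() == "AS":
            stages.add(args[2])
    return images


def violations(dockerfile_text, approved):
    """List base images in the Dockerfile that are not in the approved set."""
    return [image for image in base_images(dockerfile_text) if image not in approved]


# Hypothetical approved base image and sample Dockerfile.
APPROVED = {"registry.example.com/base/standard:9"}
SAMPLE = """\
FROM --platform=linux/amd64 registry.example.com/base/standard:9 AS build
RUN make
FROM build AS test
FROM alpine:3.19
"""
```

For the sample above, `violations(SAMPLE, APPROVED)` returns `["alpine:3.19"]`. Developers keep their choice of language and framework; only the bottom layer is checked.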

SOEs and containers: Reunited and it feels so good

Even in the world of cloud native and containers, a standard operating environment matters. The set of criteria that should be used to evaluate container base images is quite similar to what we’ve always used for Linux distributions.

Evaluate things like security, performance, how long the life cycle is (you need a longer life cycle than you think), how large the ecosystem is, and what organization backs the Linux distribution used. (See also: A Comparison of Linux Container Images.) Start with a consistent base image across your environment. It will make your life easier. Standardizing early in the journey lowers the cost of containerizing applications across an organization.

Also, don’t forget about the container host. Choose a host and standardize on it. Preferably, choose the host that matches the standard container image. The two will be binary compatible, designed and compiled identically. This will reduce cognitive load, the complexity of configuration management, and the interactions needed between the application administrators and the operations teams responsible for managing the fleet of servers underlying your containers.

A standard operating environment still matters with containers. In fact, all of the automation involved and the pressure to move faster make an SOE more important, not less so.

At Red Hat, Scott McCarty helps to educate IT professionals, customers and partners on all aspects of Linux containers, from organizational transformation to technical implementation, and works to advance Red Hat’s go-to-market strategy around containers and related technologies.

—

New Tech Forum provides a venue to explore and discuss emerging enterprise technology in unprecedented depth and breadth. The selection is subjective, based on our pick of the technologies we believe to be important and of greatest interest to InfoWorld readers. InfoWorld does not accept marketing collateral for publication and reserves the right to edit all contributed content. Send all inquiries to newtechforum@infoworld.com.