Large Language Models (or LLMs) are machine learning models (built on top of transformer neural network) that aim to solve language problems like auto-completion, text classification, text generation, and more. It is a subset of Deep Learning.

The “large” refers to the model being pre-trained by a massive set of data (could be in sizes of petabytes), accompanied by millions or billions of parameters and further fine-tuned for a specific use-case.

While LLMs made projects like ChatGPT and Google Gemini possible, they are proprietary. So, there’s a lack of transparency, and the entire control of the LLM (and its usage) is at the discretion of the company that owns it.

So, what’s the solution to this? Open source LLMs.

With an open-source LLM, you can have multiple benefits like transparency, no vendor lock-in, free to use for commercial purposes, and total control over customization of the model (in terms of performance, carbon footprint, and more).

Similar reasons why we need open-source ChatGPT chatbot alternatives:

📋

Now that you know why we need open-source LLMs, let me highlight some of the best ones available.

1. Falcon 180B

The Technology Innovation Institute (TII) in the United Arab Emirates (UAE) launched an open LLM, which performs close to its proprietary competitors.

The model includes 180 billion parameters and was trained on 3.5 trillion tokens.

In the Falcon AI model family, there are smaller/newer models like Falcon 2 11B, which is a scalable solution, and outperforms similar models from Meta and Google.

💡

• License: Falcon-180B TII License (based on Apache 2.0)

• Usage: Allowed for commercial use and should be fine-tuned for specific tasks

2. Dolly 2.0

Dolly 2.0 is one of the most prominent open-source LLM models developed by Databricks, fine-tuned on a human-generated instruction dataset. Unlike its predecessor, the dataset is its original, generated by more than 5000 employees of the company.

It includes 12 billion parameters, and is geared towards summarization, QA, classification, and some brainstorming. Dolly 2.0 does not aim to compete with existing models, but the focus was more on providing a human-generated dataset along with an LLM. They claimed it to be the first of its kind back then that tried to mimic ChatGPT’s interaction.

💡

• Parameters: 12 billion

• License: MIT

• Usage: Allowed for research and commercial use

📋

Many open-source LLMs offer variations of the models, fine-tuned for a specific task. You must check the license for them; it may not be the same as the one listed here.

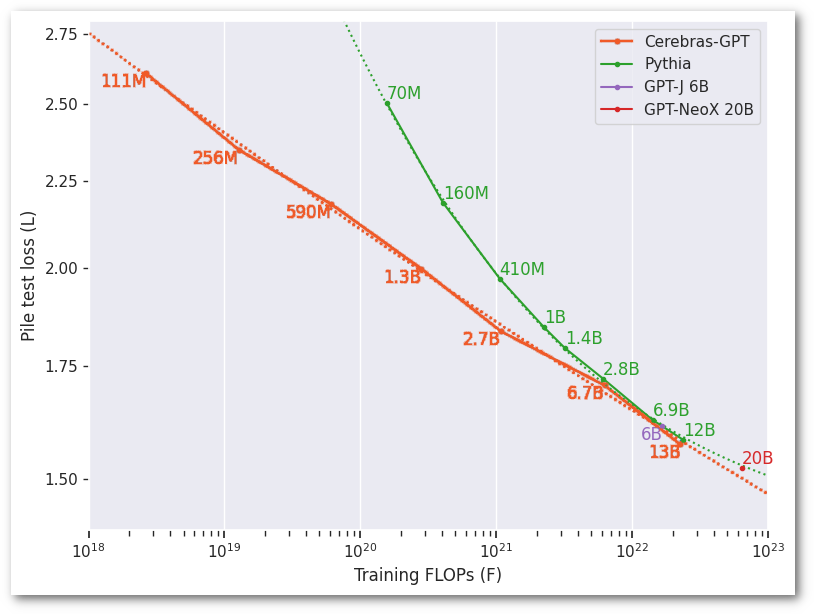

3. Cerebras-GPT

Cerebras-GPT is a family of seven GPT-3 open-source LLM models ranging from 111 million to 13 billion parameters.

These models aim for higher accuracy with a faster training times, lower cost, and consume less energy. It is trained using DeepMind’s Chinchilla formula.

💡

• Parameters: 12 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use and fine-tuned for ChatGPT like interaction

- How to build a website with WordPress and what are the best plugins to use Building a website with WordPress is an excellent choice due to its versatility, ease of use, and a vast array of plugins that enhance functionality. Here’s a comprehensive guide to building a WordPress website, along with recommendations for the best plugins

- Top WordPress Plugins for Managing Ads and Monetizing Your Website Effectively: Why is Ads Management Important for Website Monetization? Strategic ad placement throughout the website enables publishers to maximize ad revenue while ensuring a positive user experience. The positioning of ads is critical in capturing users’ attention without being intrusive or disruptive. By understanding user behavior and preferences, publishers can make informed decisions regarding ad placement to ensure that the ads are relevant and engaging.

- Top Directory Plugins for WordPress to Create Professional Listings and Directories: If you are interested in establishing professional listings and directories on your WordPress website, the following information will be of value to you. This article will present the top directory plugins available for WordPress, which include GeoDirectory, Business Directory Plugin, Sabai Directory, Connections Business Directory, and Advanced Classifieds & Directory Pro.

- The Most Important Stages and Plugins for WordPress Website Development: Developing a WordPress website requires careful planning, execution, and optimisation to ensure it is functional, user-friendly, and effective. The process can be broken into key stages, and each stage benefits from specific plugins to enhance functionality and performance. Here’s a detailed guide to the most important stages of WordPress website development and the essential plugins for each stage.

- .org vs .com: A Top Guide to the Differences in Domain Extension

When you set up a website for a business or a non-profit organisation, you might think the most important part of the address is the actual name. But the domain extension (the bit that comes after the dot) is just as important for telling people what your site is all about. - The Best WordPress Plugins for Image Optimization to Improve Load Times and SEO. The pivotal element lies in image optimization. This discourse delves into the significance of image optimization for websites and its impact on load times. Furthermore, we will delve into the advantages of leveraging WordPress plugins for image optimization, such as streamlined optimization processes, enhanced SEO, expedited load times, and an enriched user experience.

- What is a data center or Internet data center? The term “data center” has become very common due to the role it plays in many of our daily activities. Most of the data we receive and send through our mobile phones, tablets and computers ends up stored in these data centers — which many people refer to as “the Cloud”, in a more generic way.

4. Bloom

Bloom is an open-access multilingual LLM model that is trained to continue text from prompts, and can be trained for other text-based tasks.

The model includes 176 billion parameters, and can output in 46 different languages and 13 programming languages.

💡

• Parameters: 176 billion

• License: RAIL License v1.0

• Usage: Allowed for research and non-commercial entities

5. DLite V2

DLite by AI Squared aims to present lightweight LLMs that you can use almost anywhere.

The family of model includes variations ranging from 123 million to 1.5 billion parameters. It utilizes the same database built by Databricks for Dolly 2.0. Hence, it is tuned for the same use-case.

💡

• Parameters: 1.5 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use and fine-tuned for ChatGPT like interaction

6. MPT-7B

MPT-7B is another prominent open-source model by Databricks. Unlike Dolly 2.0, it competes and surpasses Meta’s LLaMA-7B in many ways.

The model was trained using a mixed set of data from various sources, including 6.7 billion parameters. It also aims to be an affordable alternative to the closed source LLM models available.

💡

• Parameters: 6.7 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use and fine-tuned for ChatGPT like interaction

7. XGen 7B

XGen 7B is an LLM model by Salesforce, one of the leading companies in its industry. The model has been trained for text generation and code tasks, claiming performance benefits over similar models like MPT.

💡

• Parameters: 6.7 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use and fine-tuned for text generation/code tasks

8. OpenLLaMA

OpenLLaMA is a reproduction of Meta’s popular LLaMa model trained with a different dataset to make it available under a permissive license, when compared to Meta’s custom commercial license.

Currently, you can find OpenLLaMA 7Bv2 and a 3Bv3 model, with all of them trained using a mixture of datasets.

You can learn more about it on its GitHub page.

💡

• Parameters: 7 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use

9. StarCoder

StarCoder is an interesting open-source LLM trained using data from GitHub (commits, issues etc) fine-tuned for coding tasks.

It includes 15 billion parameters and trained on 80+ programming languages. Considering the data is from GitHub, you will need to add attribution wherever necessary when you use the model.

💡

• License: Open RAIL-M v1

• Usage: Allowed for research and commercial use (with restrictions not to misuse it)

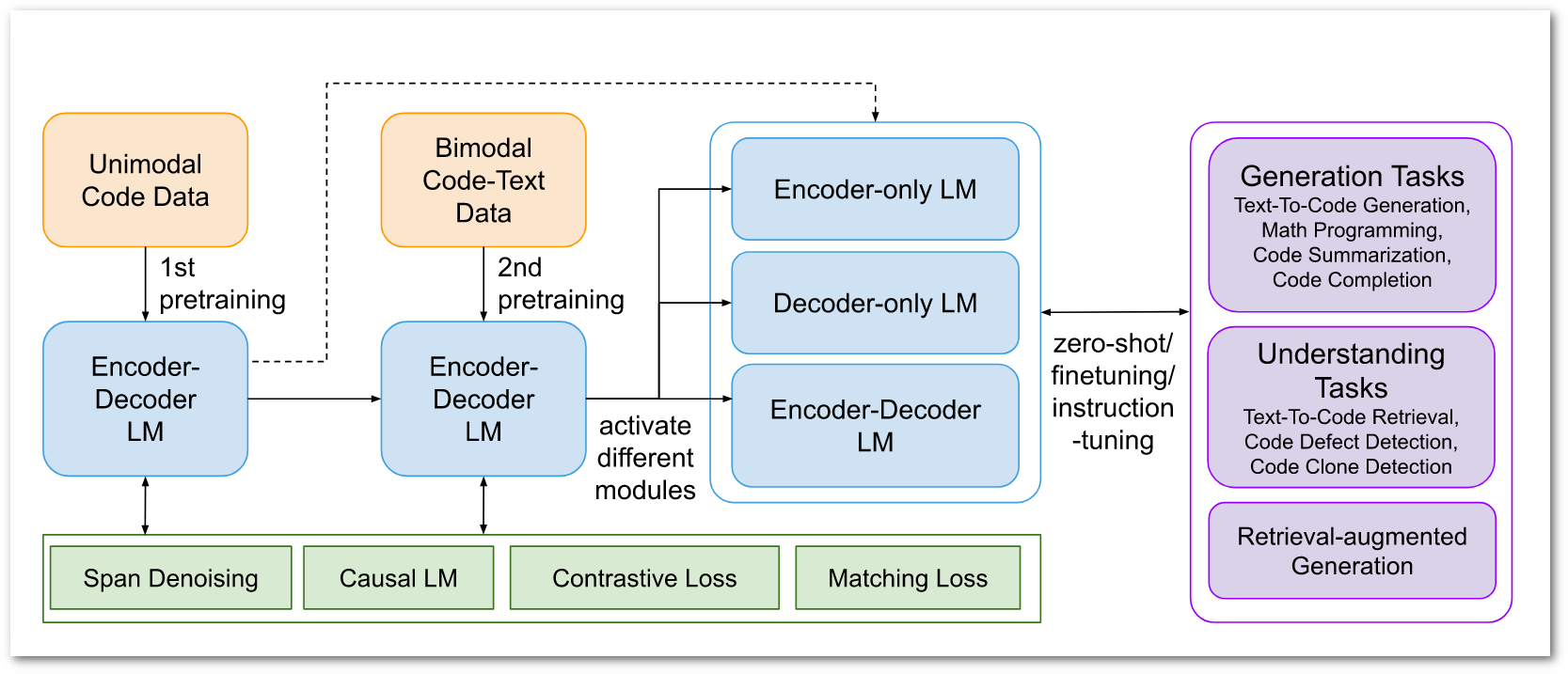

10. CodeT5

Another open-source from Salesforce, fine-tuned for coding tasks and code generation. CodeT5 is one of the most competing code LLMs that features 16 billion parameters, and claims to outperform OpenAI’s code-cushman001 model.

💡

• Parameters: 16 billion

• License: BSD 3-Clause

• Usage: Allowed for research and non-commercial use

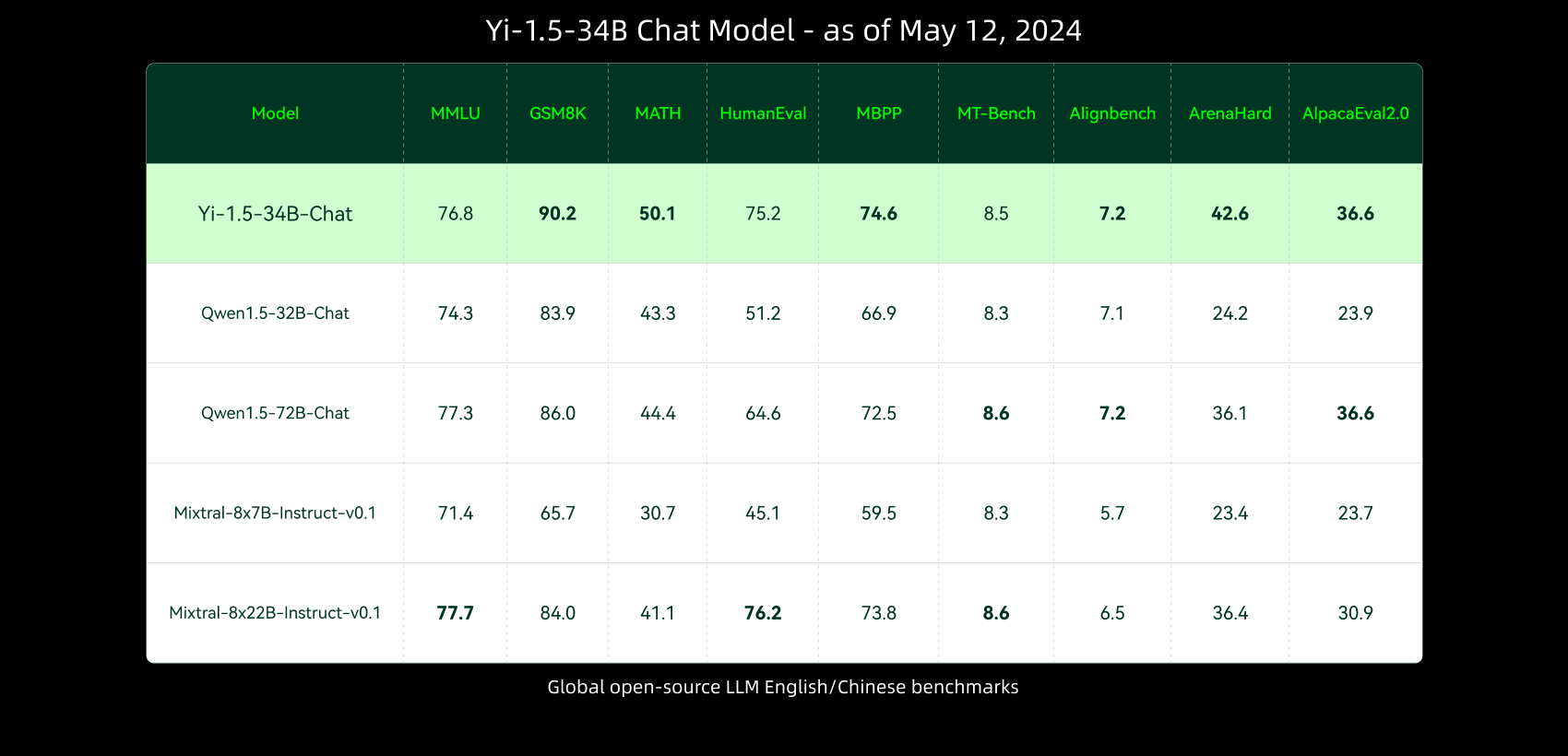

11. Yi-1.5

Yi-1.5 is an exciting open-source LLM featuring a whopping 34.4 billion parameters.

It is fine-tuned for coding, math, reasoning, and instruction-following capability. Originally, this model wasn’t open-source, but later it was. And, that’s a good thing.

💡

• Parameters: 34.4 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use

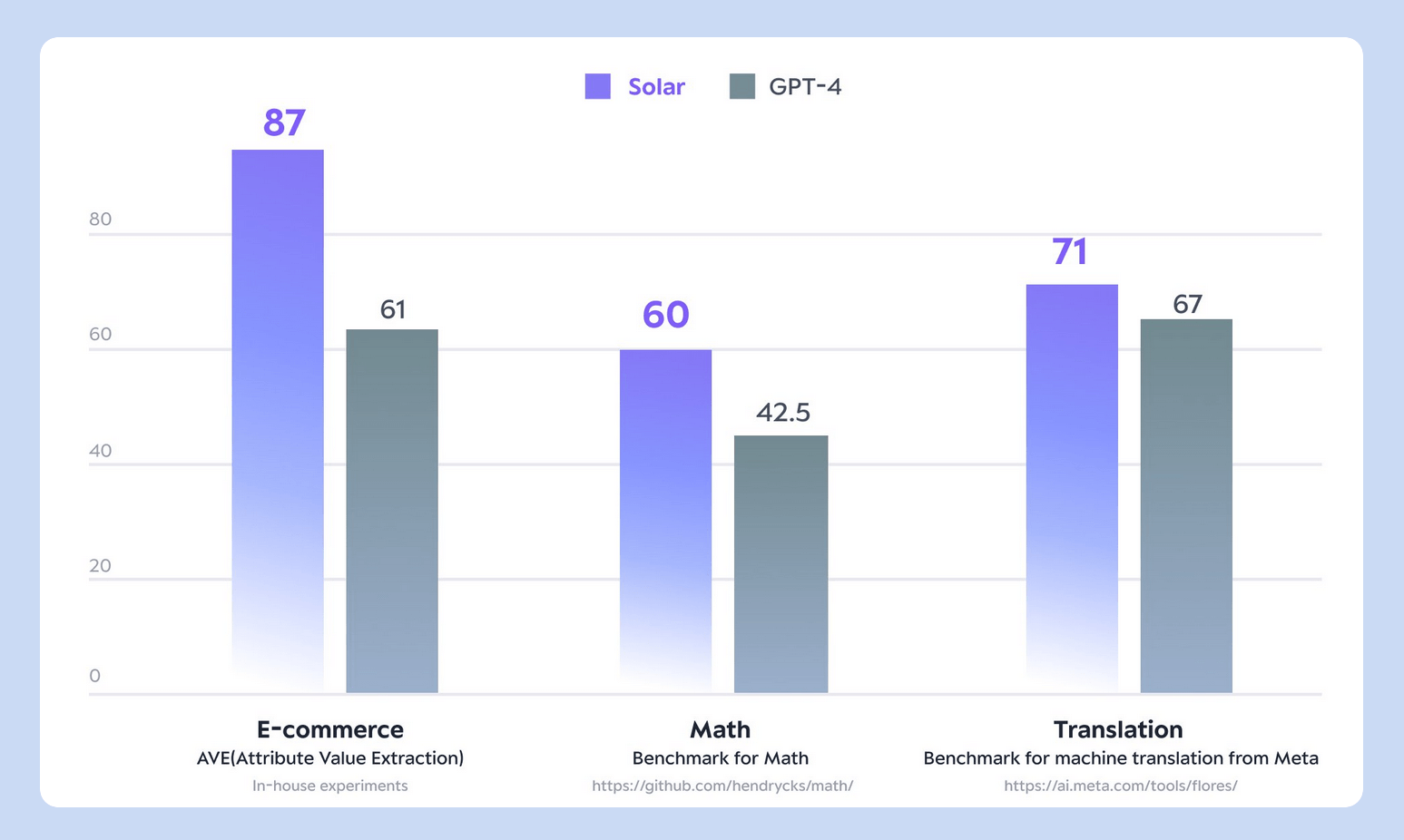

12. SOLAR-10.7B

SOLAR-10.7B is a pre-trained open-source LLM by Upstage.ai that just generates random text. You need to fine-tune it to adapt to your particular requirements.

When compared to models with larger parameters, it promises to provide a good model performance efficiency and scalability even with 10.7 billion parameters. It is an incredibly popular adaptable language model.

💡

• Parameters: 10.7 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use

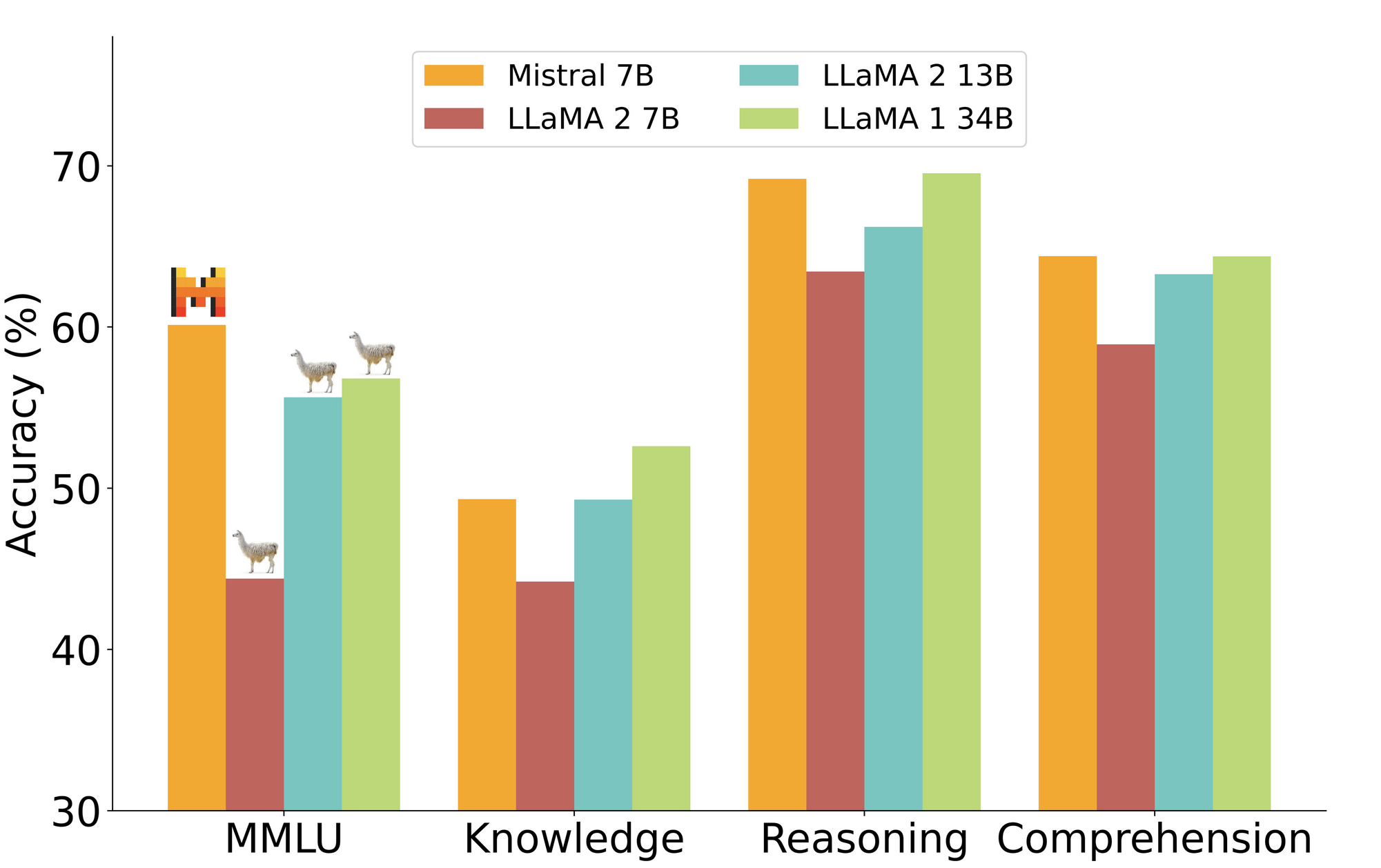

13. Mistral 7B

Mistral 7B is a powerful language model that claims to outperform LLaMa 1/2 in various ways. The large language model is capable of math, reasoning, word knowledge, QA, and more.

It is a pre-trained generative text model with 7 billion parameters. Hence, it does not have any moderation mechanisms. So, you need to fine-tune it to output moderated results.

💡

• Parameters: 7 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use

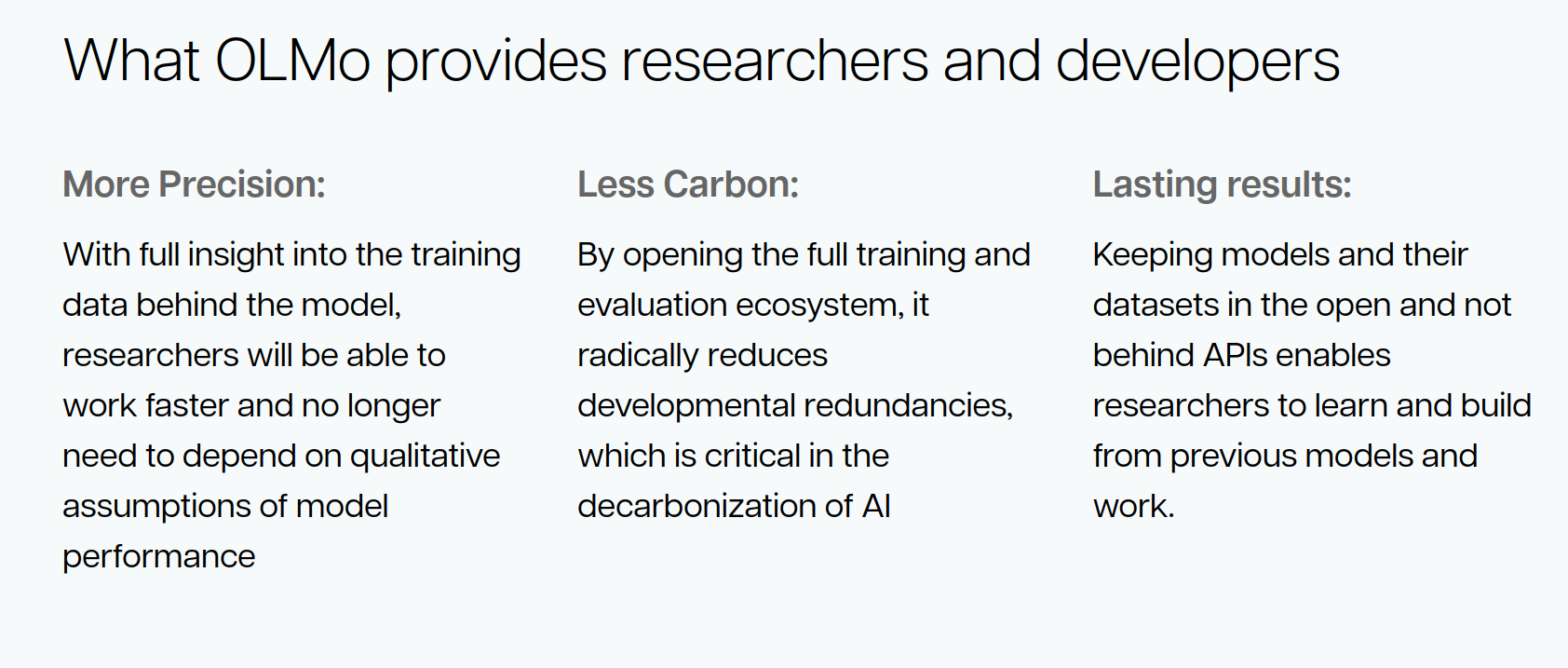

14. OLMo-7B

OLMo-7B is yet another open language model pre-trained with Dolma dataset featuring three trillion token open corpus. The entire training data, model weights, and the code is open-source, and available to use without restriction.

💡

• Parameters: 7 billion

• License: Apache 2.0

• Usage: Allowed for research and commercial use

💬 What is your favorite open-source LLM? Have you tried running any of them? Share your thoughts in the comments below!

![GNOME’s Very Own “GNOME OS” is Not a Linux Distro for Everyone [Review]](https://linuxpunx.com.au/wp-content/uploads/2021/04/gnomes-very-own-gnome-os-is-not-a-linux-distro-for-everyone-review-768x432.png)